Tuesday, December 28, 2010

INTERNET - Tracking Your Browsing

PBS Newshour 12/27/2010

Tuesday, December 21, 2010

NETWORK - Internet Access, NET Neutrality

"F.C.C. Is Set to Regulate Net Access" by BRIAN STELTER, New York Times 12/20/2010

Excerpt

The Federal Communications Commission appears poised to pass a controversial set of rules that broadly create two classes of Internet access, one for fixed-line providers and the other for the wireless Net.

The proposed rules of the online road would prevent fixed-line broadband providers like Comcast and Qwest from blocking access to sites and applications. The rules, however, would allow wireless companies more latitude in putting limits on access to services and applications.

Before a vote set for Tuesday, two Democratic commissioners said Monday that they would back the rules proposed by the F.C.C. chairman, Julius Genachowski, which try to satisfy both sides in the protracted debate over so-called network neutrality. But analysts said the debate would soon resume in the courts, as challenges to the rules are expected in the months to come.

Net neutrality, broadly speaking, is an effort to ensure equal access to Web sites and cutting-edge online services. Mr. Genachowski said these proposed rules aimed to both encourage Internet innovation and protect consumers from abuses.

“These rules fulfill a promise to the future — to companies that don’t yet exist, and the entrepreneurs that haven’t yet started work in their dorm rooms or garages,” Mr. Genachowski said in remarks prepared for the commission’s meeting on Tuesday in Washington. At present, there are no enforceable rules “to protect basic Internet values,” he added.

Many Internet providers, developers and venture capitalists have indicated that they would accept the proposal by Mr. Genachowski, which Rebecca Arbogast, a regulatory analyst for Stifel Nicolaus, a financial services firm, said “is by definition a compromise.”

UPDATE

"F.C.C. Approves Net Rules and Braces for Fight" by BRIAN STELTER, New York Times 12/21/2010

Excerpt

Want to watch hours of YouTube videos or sort through Facebook photos on the computer? Your Internet providers would be forbidden from blocking you under rules approved by the Federal Communications Commission on Tuesday. But if you want to do the same on your cellphone, you may not have the same protections.

The debate over the rules, intended to preserve open access to the Internet, seems to have resulted in a classic Washington solution — the kind that pleases no one on either side of the issue. Verizon and other service providers would prefer no government involvement. Public interest advocates think the rules stop far short of ensuring free speech.

Labels: FCC, net neutrality, networking, web

Sunday, December 19, 2010

WINDOWS - Fix-It Solution Center

Due to a problem on my home system I was reminded of a site for fixing Windows problems.

Microsoft Fix it Solution Center

For example, what I used to fix my problem was the top option (screenshot):

Diagnose and repair Windows File and Folder Problems automatically

The downloaded file installed PowerShell and ran the tool. I selected Other from the checkbox list and continued. The tool found that my Recycle Bin was corrupted (my problem) AND fixed it. Then I rebooted.

Have a Windows problem? Try it.

Thursday, December 16, 2010

COMPUTERS - Air Force Supercomputer

"Air Force Uses PS3 Game Consoles to Build Supercomputer" by Brian Kalish, NextGov 12/16/2010

Video game consoles are now more than just for fun. An Air Force supercomputer, built from off-the-shelf components, includes 1,716 PlayStation 3 game consoles.

The machine, known as the Condor Cluster, is estimated to be one of the greenest computers in the world. And if that wasn't enough, it also is the 35th or 36th fastest computer in the world, said Mark Barnell, director of high performance computing and the Condor Cluster project at the Air Force Research Laboratory, reported Government Computer News.

One of the main reasons to use PS3 processors was cost. Condor cost about $2 million to build, compared to $50 million to $80 million for a similar supercomputer, the Air Force said in a news release.

The computer also can read 20 pages of information per second, which makes it about 50,000 times faster than the average laptop, CNET reported.

Initial tasks for the machine, located in Rome, N.Y., include neuromorphic artificial intelligence research, in which programmers will teach the computer to read symbols, letters, words and sentences so it can fill in human gaps and correct human errors, CNET reported.

Labels: computers, super computers

Tuesday, December 14, 2010

SECURITY - User's Bad Habits

PBS Newshour 12/13/2010

Excerpts from transcript

JEFFREY BROWN (Newshour): All right, we talk about this group called Gnosis. How much do we know about what -- who they are? And what did they do to Gawker?

HARI SREENIVASAN, staff writer, Wired.com: Well, a lot of these sort of hacker groups are very shadowy in nature, in the sense that they -- there's no card-carrying membership that says, I'm part of this club. I'm the one who did this, and here is my address and phone number.

So, really, what they did to Gawker was come in behind the scenes in the past few weeks, past few months, figure out vulnerabilities, and essentially start to take the keys to the kingdom. Everything that Gawker held dear, most important, the user information, they took all of that out and splayed it out across the Internet.

They didn't hide the information for themselves for some sort of kind of nefarious means. They said, here, take it, because this is really -- they're the crown jewels for a website.

----

JEFFREY BROWN: Now, how are those people affected, in what ways?

HARI SREENIVASAN: Well, so, the thing -- it kind of gets back to a little bit of social engineering.

So a lot of times people don't make separate passwords and separate usernames for different websites. Sometimes, they use the same website or same e-mail address that I have for work on to a site like Gawker, and then maybe that's the same password that gets me into Facebook, and then it's also connected to Twitter.

So, as we see all of these different kind of communities that we participate in during the day, people aren't very good at keeping these walls separate. So, that's where the real influence is.

Bold-blue emphasis mine
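The password-reuse habit described in the transcript is easy to check for in your own list of logins. Below is a minimal sketch, not a real password manager: the site names and passwords are made up for illustration, and `find_reused_passwords` is a hypothetical helper name of my own.

```python
from collections import defaultdict


def find_reused_passwords(accounts):
    """Return {password: [sites]} for every password used on more than one site."""
    sites_by_password = defaultdict(list)
    for site, password in accounts.items():
        sites_by_password[password].append(site)
    # Keep only the passwords that guard more than one site.
    return {
        password: sorted(sites)
        for password, sites in sites_by_password.items()
        if len(sites) > 1
    }


# Hypothetical accounts, invented for this example only.
accounts = {
    "gawker": "hunter2",
    "facebook": "hunter2",
    "twitter": "hunter2",
    "bank": "a-long-unique-passphrase",
}

reused = find_reused_passwords(accounts)
for password, sites in reused.items():
    print("One leaked password opens:", ", ".join(sites))
```

Any password that shows up in the result is one where a single leak, like the Gawker one, exposes every site listed next to it.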

Labels: networking, pc security, web

Thursday, December 9, 2010

SOFTWARE - More on Open Office

"The Legacy of OpenOffice.org" from Open Source 11/7/2010

Minor edit of list mine

When I hear the word “fork”, I reach for my gun. OK. Maybe it is not that bad. But in the open source world, “fork” is a loaded term. It can, of course, be an expression of a basic open source freedom. But it can also represent “fighting words”. It is like the way we use the term “regime” for a government we don’t like, or “cult” for a religion we disapprove of. Calling something a “fork” is rarely intended as a compliment.

So I’ll avoid the term “fork” for the remainder of this post and instead talk about the legacy of one notable open source project, OpenOffice.org, which has over the last decade spawned numerous derivative products, some open source, some proprietary, some which fully coordinate with the main project, others which have diverged, some which have prospered and endured for many years, others which did not, some which tried to offer more than OpenOffice, and others which attempted, intentionally, to offer less, some which changed the core code and other which simply added extensions.

If one just read the headlines over the past month one would get the mistaken notion that LibreOffice was the first attempt to take the OpenOffice.org open source code and make a different product from it, or even a separate open source project. This is far from true. There have been many spin-off products/projects, including:

- StarOffice (with a history that goes back even further, pre-Sun, to StarDivision)

- Symphony

- EuroOffice

- RedOffice

- NeoOffice

- PlusOffice

- OxygenOffice

- Go-OO

- Portable OpenOffice

- and, of course, LibreOffice

I’ve tracked down some dates of various releases of these projects and placed them on a time line above. (see full article)

So before we ring the death knell for OpenOffice, let’s recognize the potency of this code base, in terms of its ability to spawn new projects. LibreOffice is the latest, but likely not the last example we will see. This is a market where “one size fits all” does not ring true. I’d expect to see different variations on these editors, just as there are different kinds of users, and different markets which use these kinds of tools. Whether you call it a “distribution” or a “fork”, I really don’t care. But I do believe that the only kind of open source project that does not spawn off additional projects like this is a dead project.

Labels: OpenOffice, software

Thursday, December 2, 2010

SECURITY - FTC Changes Stance on Internet Privacy

"F.T.C. Backs Plan to Honor Privacy of Online Users" by EDWARD WYATT and TANZINA VEGA, New York Times 12/1/2010

Excerpt

Signaling a sea change in the debate over Internet privacy, the government’s top consumer protection agency on Wednesday advocated a plan that would let consumers choose whether they want their Internet browsing and buying habits monitored.

Saying that online companies have failed to protect the privacy of Internet users, the Federal Trade Commission recommended a broad framework for commercial use of Web consumer data, including a simple and universal “do not track” mechanism that would essentially give consumers the type of control they gained over marketers with the national “do not call” registry.

Those measures, if widely used, could directly affect the billions of dollars in business done by online advertising companies and by technology giants like Google that collect highly focused information about consumers that can be used to deliver personalized advertising to them.

While the report is critical of many current industry practices, the commission will probably need the help of Congress to enact some of its recommendations. For now, the trade commission hopes to adopt an approach that it calls “privacy by design,” where companies are required to build protections into their everyday business practices.

Labels: internet, pc security

Monday, November 22, 2010

SECURITY - On the Lighter Side, CIA Stumped?

"Clues to Stubborn Secret in C.I.A.’s Backyard" by JOHN SCHWARTZ, New York Times 11/20/2010

Excerpt

It is perhaps one of the C.I.A.’s most mischievous secrets.

“Kryptos,” the sculpture nestled in a courtyard of the agency’s Virginia headquarters since 1990, is a work of art with a secret code embedded in the letters that are punched into its four panels of curving copper.

“Our work is about discovery — discovering secrets,” said Toni Hiley, director of the C.I.A. Museum. “And this sculpture is full of them, and it still hasn’t given up the last of its secrets.”

Not for lack of trying. For many thousands of would-be code crackers worldwide, “Kryptos” has become an object of obsession. Dan Brown has even referred to it in his novels.

The code breakers have had some success. Three of the puzzles, 768 characters long, were solved by 1999, revealing passages — one lyrical, one obscure and one taken from history. But the fourth message of “Kryptos” — the name, in Greek, means “hidden” — has resisted the best efforts of brains and computers.

And Jim Sanborn, the sculptor who created “Kryptos” and its puzzles, is getting a bit frustrated by the wait. “I assumed the code would be cracked in a fairly short time,” he said, adding that the intrusions on his life from people who think they have solved his fourth puzzle are more than he expected.

So now, after 20 years, Mr. Sanborn is nudging the process along. He has provided The New York Times with the answers to six letters in the sculpture’s final passage. The characters that are the 64th through 69th in the final series on the sculpture read NYPVTT. When deciphered, they read BERLIN.

But there are many steps to cracking the code, and the other 91 characters and their proper order are yet to be determined.

“Having some letters where we know what they are supposed to be could be extremely valuable,” said Elonka Dunin, a computer game designer who runs the most popular “Kryptos” Web page.

Note, there is a multimedia link for a hint on the article page.
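For the curious, here is a small sketch of what the six-letter clue does and does not tell you. It only compares the ciphertext NYPVTT with the plaintext BERLIN quoted in the article. The two checks (a single fixed shift, and a one-to-one letter substitution) are my own choice of the simplest ciphers to rule out, not anything Mr. Sanborn has said about his method.

```python
CIPHERTEXT = "NYPVTT"  # characters 64-69 of the final passage, per the article
PLAINTEXT = "BERLIN"   # the published answer for those six letters


def letter_shifts(cipher, plain):
    """Alphabet distance (0-25) from each plaintext letter to its cipher letter."""
    return [(ord(c) - ord(p)) % 26 for c, p in zip(cipher, plain)]


shifts = letter_shifts(CIPHERTEXT, PLAINTEXT)

# A Caesar cipher would use the same shift for every letter.
is_caesar = len(set(shifts)) == 1

# A simple substitution would always decode a given cipher letter the same way.
decoded_as = {}
is_simple_substitution = True
for c, p in zip(CIPHERTEXT, PLAINTEXT):
    if decoded_as.setdefault(c, p) != p:
        is_simple_substitution = False

print("Shifts:", shifts)
print("Single fixed shift?", is_caesar)
print("Simple substitution?", is_simple_substitution)
```

Every letter has a different shift, and the two T's decode to different letters (I and N), so neither of the easy schemes fits. That agrees with the article's point that there are many steps to cracking the code.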

Labels: data security, humor

Tuesday, November 9, 2010

WINXP - My Documents Folder

I was answering a question on the Computer Help Forum (sidebar) when I realized that this subject should be posted here.

This has to do with the location of your My Documents folder, specifically if you want to move it to another hard drive. This is especially handy if your C: drive is getting a bit tight (full) AND you have a second hard drive (like D:) that has enough free space.

Note that this move is transparent in the use of [Start] menu, Documents. After moving your My Documents folder, the menus get you to the correct folder.

Note that for this post I will use C: and D:

Let's start with some background info. There is a set of special folders in My Documents that have a Special Folder Icon (see screenshot), like My Music or My Pictures.

There are 2 ways to move your My Documents folder:

For Multi-User system (more than one user logs on)

Any user can drag-drop their My Documents folder from C: to D:

- In Explorer (aka My Computer) find your My Documents folder (in C:\Documents and Settings\your-profile-name)

- Open another Explorer window on D:

- Now drag your My Documents folder from C: to D:

You SHOULD get a set of folders in D:, here's where those Special Folder Icons come in.

For user John Doe, you should see something like:

- John Doe's Documents

- John Doe's Pictures

The same happens for any other user that is logged-on and moves their My Documents folder, but with their logon name.

Single-User system

Here is how I moved the My Documents folder on my home system

ORDER OF STEPS IS IMPORTANT

- Created D:\My Documents

- COPIED the full contents of C:\Documents and Settings\my-profile-name\My Documents to D:\My Documents, DO NOT DRAG/DROP

- IMPORTANT - use the Registry Editor to change my Document Path (see screenshot)

- REBOOT

Regardless of which method you use, you should now test to see if it worked.

- Click [Start], Documents to open your My Documents folder

- Now find a simple file you saved before (suggest a text file) and open it

- Now use the File menu, Save As, and rename the file (example MyDocsPathTest.txt), save

If things went correctly, the MyDocsPathTest.txt should be in D:\My Documents but NOT in C:\....\My Documents.

At this point you can delete the contents of C:\....\My Documents, OR the entire folder. In my home system I deleted the My Documents on C:
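If you would rather check the result with a script before deleting anything, here is a minimal sketch of the same test. The function name `move_succeeded` is my own invention, and the demo uses two temporary folders to stand in for the old and new My Documents locations. On a real system you would pass your own C: and D: paths instead.

```python
import tempfile
from pathlib import Path


def move_succeeded(old_docs, new_docs, test_name="MyDocsPathTest.txt"):
    """True when the test file is in the new folder and NOT in the old one."""
    in_new = (Path(new_docs) / test_name).is_file()
    in_old = (Path(old_docs) / test_name).is_file()
    return in_new and not in_old


# Demo only: temporary folders play the role of the old and new locations.
with tempfile.TemporaryDirectory() as old_dir, tempfile.TemporaryDirectory() as new_dir:
    # Pretend the Save As step put the test file in the new location.
    (Path(new_dir) / "MyDocsPathTest.txt").write_text("test")
    print("Move worked:", move_succeeded(old_dir, new_dir))
```

If the function returns False, do not delete the old folder. Recheck the steps first.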

Labels: My Documents, windows, winxp

Wednesday, November 3, 2010

SECURITY - Courier CD

Where I work (IT Tech) our software engineers have to transport classified code to/from customers, and using USB drives is not authorized. A solution was agreed to by a customer, one that is simple and does not require expensive software.

When I became aware of the method used I thought that this could be used by anyone who wishes to transport any data files on a CD.

Courier CD System:

The first requirement is to have a good ZIP utility (like WinZIP) that is capable of password protection of ZIPped files.

To create a Courier CD

- ZIP your files into a first file, and give it a filename that does not reveal what it contains (example: container.zip)

- ZIP container.zip into a second file, with password protection (example: wrapper.zip)

- Now write wrapper.zip to a CD

Why the double ZIP?

When you ZIP a set of files with a password, anyone with a ZIP utility can see the directory of the contents. They just cannot extract the files without the password. With the double ZIP, the CD shows only (in this example) wrapper.zip, and opening that reveals only container.zip, not the names of your files.

Now you can transport the Courier CD without the worry that the files can be compromised if you lose the CD.
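To see why the double ZIP hides the file names, here is a small sketch using Python's standard zipfile module. It is only a demonstration of the directory listing, not the courier procedure itself: the standard module cannot create password-protected archives, so the password step still has to be done with your ZIP utility. The file names and the `build_courier_zip` helper are made up for this example.

```python
import io
import zipfile


def build_courier_zip(files, inner_name="container.zip"):
    """Return the bytes of an outer ZIP that holds a single inner ZIP."""
    # First ZIP: the real files go into the inner archive.
    inner = io.BytesIO()
    with zipfile.ZipFile(inner, "w", zipfile.ZIP_DEFLATED) as zf:
        for name, data in files.items():
            zf.writestr(name, data)

    # Second ZIP: the outer archive contains only the inner archive.
    # NOTE: no password is applied here; do that with your ZIP utility.
    outer = io.BytesIO()
    with zipfile.ZipFile(outer, "w", zipfile.ZIP_DEFLATED) as zf:
        zf.writestr(inner_name, inner.getvalue())
    return outer.getvalue()


# Hypothetical files, invented for this example.
files = {"design_notes.txt": b"notes", "source/main.c": b"int main(void){return 0;}"}
wrapper_bytes = build_courier_zip(files)

# What someone without the password could list from the outer archive.
with zipfile.ZipFile(io.BytesIO(wrapper_bytes)) as zf:
    visible = zf.namelist()
print(visible)
```

The listing of the outer archive contains only container.zip, so the names design_notes.txt and source/main.c never appear to someone who cannot open it.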

Labels: data security, pc security, windows

Thursday, October 28, 2010

COMPUTERS - The Really, Really FAST Supercomputer

"Supercomputer in China super fast: U.S. computers feel inadequate" by Melissa Bell, Washington Post Blog 10/28/2010

They own all sorts of credit lines with the world. They make our light bulbs. Both the Republicans and the Democrats are pretty sure they'll take us over by 2030.

And now, China may have the world's fastest supercomputer.

On Thursday, China unveiled the Tianhe-1A, out-powering the previous supercomputer record holder, the Cray XT5 Jaguar, by computing at a rate 43 percent higher.

The computer is "another sign of the country's growing technological prowess that is likely to set off alarms about U.S. competitiveness and national security," Don Clark at the Wall Street Journal writes.

Here's what Mashable has to say about it:

Tianhe-1A was designed by the National University of Defense Technology (NUDT) in China, and it is already fully operational. To achieve the new performance record, Tianhe-1A uses 7,168 Nvidia Tesla M2050 GPUs and 14,336 Intel Xeon CPUs. It cost $88 million; its 103 cabinets weigh 155 tons, and the entire system consumes 4.04 megawatts of electricity.

To put that in Luddite speak: The computer is fast. Really, really fast.

A petaflop is the measure of one thousand trillion operations per second or (ops). Most consumer computers are lucky to get a few billion operations per second.

In computing, speed is of the utmost importance. Most supercomputers are used for the toughest scientific problems, such as simulating drug products and designing weapons.

The computer, though constructed in China, still uses materials sourced from Intel and Nvidia, two California-based companies. One major shift in the computer's construction is its use of Nvidia chips -- the graphic chips are more commonly found in video games instead of computers.

Although some see the release of the computer as an affront to the U.S. lead in computing, others see it as a "wake-up call," as Jack Dongarra, a supercomputer expert told the Wall Street Journal, similar to when Japan released the Earth Simulator supercomputer in 2002. It took the U.S. two years to regain the crown.

Plus, there could be more surprises in store. The Top 500 list of supercomputers around the world has yet to be announced. A computer faster than the Tianhe could be unveiled before the list is released in two weeks.
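To put the petaflop figure from the article in perspective, here is a rough back-of-the-envelope sketch. The article only says consumer computers manage "a few billion" operations per second, so the 3 billion figure below is my own assumption, not a measurement.

```python
# One petaflop, as defined in the article: one thousand trillion ops per second.
PETAFLOP_OPS_PER_SEC = 1_000 * 10**12

# ASSUMPTION: "a few billion" ops per second taken as 3 billion for a consumer PC.
CONSUMER_OPS_PER_SEC = 3 * 10**9

# How many consumer PCs it takes to match one petaflop.
speedup = PETAFLOP_OPS_PER_SEC / CONSUMER_OPS_PER_SEC

# How long one consumer PC needs for one second of petaflop-level work.
seconds_needed = speedup
days_needed = seconds_needed / 86_400  # seconds in a day

print(f"One petaflop is about {speedup:,.0f} consumer PCs")
print(f"or about {days_needed:.1f} days of work for a single PC")
```

Under that assumption, one second of work at one petaflop would keep a single home computer busy for several days.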

Labels: computers, super computers

Monday, October 25, 2010

SOFTWARE - Oracle's NON-Support of OpenOffice

"Oracle Demonstrates Continued Support for OpenOffice.org" Oracle Press Release 10/13/2010

Bold-blue emphasis mine

Typical corporate behavior. NOT understanding that OpenOffice.org WAS an open-source community, NOT corporate owned. They think their engineers can do a better job than the open-source community that originated the software, the same arrogance that Microsoft demonstrates.

Note my previous post on this subject.

News Facts

- Further demonstrating its commitment to the OpenOffice.org community, Oracle today announced that it is participating in the ODF Plugfest, being held in Brussels, October 14-15.

- On the fifth anniversary of the Open Document Format (ODF) becoming an International Standard, Oracle applauds the community and OASIS for its efforts and renews its commitment to the ODF-based OpenOffice.org productivity suite.

- Oracle’s growing team of developers, QA engineers, and user experience personnel will continue developing, improving, and supporting OpenOffice.org as open source, building on the 7.5 million lines of code already contributed to the community.

- Oracle demonstrates its commitment to OpenOffice.org with new versions of this free product in collaboration with the community – OpenOffice.org 3.2.1 and OpenOffice.org 3.3 Beta – both representing advances in features and performance advancements with the introduction of new tools and extensions. Significant community contributions include localization, quality assurance, porting, documentation and user experience.

- Oracle’s ongoing support for OpenOffice.org reinforces its commitment to developing software based on open standards, providing IT users with flexibility, lower short and long-term costs and freedom from vendor lock-in.

- By investing significant resources in developing, testing, optimizing, and supporting other open source technologies such as MySQL, GlassFish, Linux, PHP, Apache, Eclipse, Berkeley DB, NetBeans, VirtualBox, Xen, and InnoDB, Oracle is invested in their future development and contributing back to the communities that produce it.

- With more than 100 million users, OpenOffice.org is the most advanced, feature-rich open source productivity suite, and continued contributions through www.openoffice.org will only improve this already popular software. Oracle views ODF as critical to providing OpenOffice.org with a complete, open, and modern document format, empowering interoperability and choice on the desktop.

- Oracle invites community participation in the OpenOffice.org conference, ODF Plugfests, and discussion groups, and welcomes contributions to the code base.

Labels: LibreOffice, linux, OpenOffice, software, ubuntu, win xp, windows

Tuesday, October 19, 2010

WINXP - Utility Recommendations

I have 2 new utilities to recommend.

- WinBootInfo from GreenVantage

- Icon Phile (freeware)

WinBootInfo

The screenshot shows you most of what you need to know about this utility (especially the summary block in the middle). The printed report is just as good.

There is one drawback. GreenVantage uses a funky authorization key process and getting them to answer is a hassle.

Icon Phile

This is an oldie but goody. It allows you to change icons shown by Explorer (My Computer), even those that normally cannot be changed (like the icon for TXT file types).

The screenshots on their page say it all.

NOTE: The download is a ZIP file that contains the entire utility. You copy or extract the contents to a folder of your choice (C:\Program Files\IconPhile for example), then create a shortcut to run it.

Monday, October 11, 2010

SECURITY - HTML 5 a Privacy Threat?

"New Web Code Draws Concern Over Risks to Privacy" by TANZINA VEGA, New York Times 10/10/2010

Excerpt

Worries over Internet privacy have spurred lawsuits, conspiracy theories and consumer anxiety as marketers and others invent new ways to track computer users on the Internet. But the alarmists have not seen anything yet.

In the next few years, a powerful new suite of capabilities will become available to Web developers that could give marketers and advertisers access to many more details about computer users’ online activities. Nearly everyone who uses the Internet will face the privacy risks that come with those capabilities, which are an integral part of the Web language that will soon power the Internet: HTML 5.

The new Web code, the fifth version of Hypertext Markup Language used to create Web pages, is already in limited use, and it promises to usher in a new era of Internet browsing within the next few years. It will make it easier for users to view multimedia content without downloading extra software; check e-mail offline; or find a favorite restaurant or shop on a smartphone.

Most users will clearly welcome the additional features that come with the new Web language.

“It’s going to change everything about the Internet and the way we use it today,” said James Cox, 27, a freelance consultant and software developer at Smokeclouds, a New York City start-up company. “It’s not just HTML 5. It’s the new Web.”

But others, while also enthusiastic about the changes, are more cautious.

Most Web users are familiar with so-called cookies, which make it possible, for example, to log on to Web sites without having to retype user names and passwords, or to keep track of items placed in virtual shopping carts before they are bought.

The new Web language and its additional features present more tracking opportunities because the technology uses a process in which large amounts of data can be collected and stored on the user’s hard drive while online. Because of that process, advertisers and others could, experts say, see weeks or even months of personal data. That could include a user’s location, time zone, photographs, text from blogs, shopping cart contents, e-mails and a history of the Web pages visited.

The new Web language “gives trackers one more bucket to put tracking information into,” said Hakon Wium Lie, the chief technology officer at Opera, a browser company.

Or as Pam Dixon, the executive director of the World Privacy Forum in California, said: “HTML 5 opens Pandora’s box of tracking in the Internet.”

Representatives from the World Wide Web Consortium say they are taking questions about user privacy very seriously. The organization, which oversees the specifications developers turn to for the new Web language, will hold a two-day workshop on Internet technologies and privacy.

Labels: internet, pc security, web

Thursday, September 30, 2010

PC SECURITY - Biblical Computer Worm?

I debated whether to post this here or on my political blog, but since this is in the context of PC Security and can give us an idea of what is possible, "here" won out.

"In a Computer Worm, a Possible Biblical Clue" by JOHN MARKOFF and DAVID E. SANGER, New York Times 9/29/2010

Excerpt

Deep inside the computer worm that some specialists suspect is aimed at slowing Iran’s race for a nuclear weapon lies what could be a fleeting reference to the Book of Esther, the Old Testament tale in which the Jews pre-empt a Persian plot to destroy them.

That use of the word “Myrtus” — which can be read as an allusion to Esther — to name a file inside the code is one of several murky clues that have emerged as computer experts try to trace the origin and purpose of the rogue Stuxnet program, which seeks out a specific kind of command module for industrial equipment.

Not surprisingly, the Israelis are not saying whether Stuxnet has any connection to the secretive cyberwar unit it has built inside Israel’s intelligence service. Nor is the Obama administration, which while talking about cyberdefenses has also rapidly ramped up a broad covert program, inherited from the Bush administration, to undermine Iran’s nuclear program. In interviews in several countries, experts in both cyberwar and nuclear enrichment technology say the Stuxnet mystery may never be solved.

ALSO

"SECURITY - World Industrial Security Threat?"

"Israeli Test on Worm Called Crucial in Iran Nuclear Delay" by WILLIAM J. BROAD, JOHN MARKOFF, and DAVID E. SANGER; New York Times 1/15/2011

Labels: computer worms virus, pc security

Wednesday, September 29, 2010

SOFTWARE - OpenOffice News

"New bid for freedom by OpenOffice" by Sue Gee, I-Programmer 9/28/2010

I agree with Steven's last statement. Oracle corporate leaders are just dumb. They just don't understand that open-source means that they do NOT own the source-code for the software. The source-code belongs to the community.

The open source community behind the free OpenOffice productivity suite is to create an independent Document Foundation and to rebrand its software as LibreOffice.

This move is being seen as an attempt to distance itself from Oracle which has so far declined to donate the OpenOffice brand to the project.

According to the new foundation's first official press release:

"After ten years' successful growth with Sun Microsystems as founding and principle sponsor, the project launches an independent foundation called The Document Foundation, to fulfill the promise of independence written in the original charter"

The Document Foundation has received support from almost the entire OpenOffice programming community, including Novell, Red Hat and Google, leaving only Oracle with the original OpenOffice repository. The Foundation said that it had invited Oracle to become a member of the new organization, and to donate the brand it acquired with Sun Microsystems 18 months ago but that until a decision is reached the LibreOffice brand will be used to refer to the Document Foundation's software development efforts.

Speaking for the group of volunteers involved in the development of OpenOffice, Sophie Gautier, former maintainer of the French-speaking language project said:

"We believe that the Foundation is a key step for the evolution of the free office suite, as it liberates the development of the code and the evolution of the project from the constraints represented by the commercial interests of a single company."

The beta of LibreOffice is available for download on the Document Foundation's website and developers are invited to join the project and contribute to the code in the new friendly and open environment, to shape the future of office productivity suites alongside contributors who translate, test, document, support, and promote the software.

I was wondering if this sort of thing would happen when Oracle bought Sun Microsystems.

Oracle = big-money business NOT interested in supporting non-profit open source community.

UPDATE

"Oracle kicks LibreOffice supporters out of OpenOffice" by Steven J. Vaughan-Nichols, ComputerWorld 10/19/2010

Well, that didn't take long. When The Document Foundation (TDF) created LibreOffice from OpenOffice's code, they left the door open for Oracle, OpenOffice's main stake-owner, to join them. Oracle's reply was to tell anyone involved with LibreOffice to get the heck out of OpenOffice.

This isn't too much of a surprise. Oracle made it clear that it wouldn't be joining with The Document Foundation in working on LibreOffice.

What I did find surprising is that Oracle turned a fork into a fight. In a regularly scheduled OpenOffice.org community council meeting on Oct. 14, council chair and Oracle employee Louis Suárez-Potts wrote, "I would like to propose that the TDF members of the CC consider the points those of us who have not joined TDF have made about conflict of interest and confusion ... I would further ask them to resign their offices, so as to remove the apparent conflict of interest their current representational roles produce."

These OpenOffice.org council members, who are also TDF leaders, include Charles H. Schulz, a major OpenOffice.org contributor for almost ten years; Christoph Noack, co-leader of the OpenOffice User Experience Project; and Cor Nouws, a well-known OpenOffice developer with more than six years of experience in the project. In short, these aren't just leaders — they're important OpenOffice developers.

They haven't declared yet what they'll do to this de facto ultimatum. It seems to me though that they have little choice but to leave. Certainly Oracle wants them out as soon as possible. Suárez-Potts wrote that he wanted a "final decision on your part" as soon as possible. "It is of [the] utmost importance that we do not confuse users and contributors as to what is what, as to the identity of OpenOffice.org -- or of your organization."

I can understand how Oracle wants to quickly define this matter as Oracle vs. everyone involved with LibreOffice. But it's a really dumb move.

The Document Foundation wasn't so much about setting up a rival to OpenOffice as it was about giving an important but stagnant open-source program a kick in the pants. OpenOffice was and is good, but it's not been getting significantly better in years. TDF wanted to change that.

Oracle thinks it's more important to fight with some of the people who could have been its strongest supporters than try to work with them. Dumb! Cutting off your nose to spite your face is always a mistake.

Of course, this is all a piece of Oracle's "my way or the highway" approach to all the open-source programs it inherited from Sun. Oracle may support open source in general, but it's doing a lousy job of doing what's best for its own open-source programs.

This is going to come back to haunt Oracle. I fully expect for LibreOffice to replace OpenOffice as the number one open-source office suite and chief rival to Microsoft Office within the next twelve months.

I agree with Steven's last statement. Oracle corporate leaders are just dumb. They just don't understand that open-source means that they do NOT own the source-code for the software. The source-code belongs to the community.

Labels:

LibreOffice,

linux,

OpenOffice,

software,

ubuntu,

win xp,

windows

Monday, September 27, 2010

INTERNET - National Security vs User Freedom

"U.S. Wants to Make It Easier to Wiretap the Internet" by CHARLIE SAVAGE, New York Times 9/27/2010

Excerpt

Federal law enforcement and national security officials are preparing to seek sweeping new regulations for the Internet, arguing that their ability to wiretap criminal and terrorism suspects is “going dark” as people increasingly communicate online instead of by telephone.

Essentially, officials want Congress to require all services that enable communications — including encrypted e-mail transmitters like BlackBerry, social networking Web sites like Facebook and software that allows direct “peer to peer” messaging like Skype — to be technically capable of complying if served with a wiretap order. The mandate would include being able to intercept and unscramble encrypted messages.

The bill, which the Obama administration plans to submit to lawmakers next year, raises fresh questions about how to balance security needs with protecting privacy and fostering innovation. And because security services around the world face the same problem, it could set an example that is copied globally.

James X. Dempsey, vice president of the Center for Democracy and Technology, an Internet policy group, said the proposal had “huge implications” and challenged “fundamental elements of the Internet revolution” — including its decentralized design.

“They are really asking for the authority to redesign services that take advantage of the unique, and now pervasive, architecture of the Internet,” he said. “They basically want to turn back the clock and make Internet services function the way that the telephone system used to function.”

But law enforcement officials contend that imposing such a mandate is reasonable and necessary to prevent the erosion of their investigative powers.

“We’re talking about lawfully authorized intercepts,” said Valerie E. Caproni, general counsel for the Federal Bureau of Investigation. “We’re not talking expanding authority. We’re talking about preserving our ability to execute our existing authority in order to protect the public safety and national security.”

Labels:

internet,

pc security

Friday, September 24, 2010

IE8 - How to Save the Window Size

Internet Explorer has long had a really annoying problem: it does NOT save its window size, and it's incredible that Microsoft has not fixed it yet.

To "fix" this problem (same as in IE 7 and IE6):

- Close all Internet Explorer windows except for one.

- Right-click on any link in the page and select "Open in New Window."

- Close the first browser window using the [X] in the upper right corner in the title bar.

- Resize the window manually by dragging the sides to fill the screen.

Note: Do NOT click the Maximize button; you have to resize the window manually.

- Hold the [Ctrl] key and click the [X] in the upper right corner in the title bar.

I've verified this works.

INTERNET - Cyberwars Update

"Cyberwar Chief Calls for Secure Computer Network" by THOM SHANKER, New York Times 9/23/2010

The new commander of the military’s cyberwarfare operations is advocating the creation of a separate, secure computer network to protect civilian government agencies and critical industries like the nation’s power grid against attacks mounted over the Internet.

The officer, Gen. Keith B. Alexander, suggested that such a heavily restricted network would allow the government to impose greater protections for the nation’s vital, official on-line operations. General Alexander labeled the new network “a secure zone, a protected zone.” Others have nicknamed it “dot-secure.”

It would provide to essential networks like those that tie together the banking, aviation, and public utility systems the kind of protection that the military has built around secret military and diplomatic communications networks — although even these are not completely invulnerable.

For years, experts have warned of the risks of Internet attacks on civilian networks. An article published a few months ago by the National Academy of Engineering said that “cyber systems are the ‘weakest link’ in the electricity system,” and that “security must be designed into the system from the start, not glued on as an afterthought.”

General Alexander, an Army officer who leads the military’s new Cyber Command, did not explain just where the fence should be built between the conventional Internet and his proposed secure zone, or how the gates would be opened to allow appropriate access to information they need every day. General Alexander said the White House hopes to complete a policy review on cyber issues in time for Congress to debate updated or new legislation when it convenes in January.

General Alexander’s new command is responsible for defending Defense Department computer networks and, if directed by the president, carrying out computer-network attacks overseas.

But the military is broadly prohibited from engaging in law enforcement operations on American soil without a presidential order, so the command’s potential role in assisting the Department of Homeland Security, the Federal Bureau of Investigation or the Department of Energy in the event of a major attack inside the United States has not been set down in law or policy.

“There is a real probability that in the future, this country will get hit with a destructive attack, and we need to be ready for it,” General Alexander said in a roundtable with reporters at the National Cryptologic Museum here at Fort Meade in advance of his Congressional testimony on Thursday morning.

“I believe this is one of the most critical problems our country faces,” he said. “We need to get that right. I think we have to have a discussion about roles and responsibilities: What’s the role of Cyber Command? What’s the role of the ‘intel’ community? What’s the role of the rest of the Defense Department? What’s the role of D.H.S.? And how do you make that team work? That’s going to take time.”

Some critics have questioned whether the Defense Department can step up protection of vital computer networks without crashing against the public’s ability to live and work with confidence on the Internet. General Alexander said, “We can protect civil liberties and privacy and still do our mission. We’ve got to do that.”

Speaking of the civilian networks that are at risk, he said: “If one of those destructive attacks comes right now, I’m focused on the Defense Department. What are the responsibilities — and I think this is part of the discussion — for the power grid, for financial networks, for other critical infrastructure? How do you protect the country when it comes to that kind of attack, and who is responsible for it?”

As General Alexander prepared for his testimony before the House Armed Services Committee, the ranking Republican on the panel, Howard P. McKeon of California, noted the Pentagon’s progress in expanding its cyber capabilities.

But he said that “many questions remain as to how Cyber Command will meet such a broad mandate” given the clear “vulnerabilities in cyberspace.”

The committee chairman, Rep. Ike Skelton, Democrat of Missouri, said that “cyberspace is an environment where distinctions and divisions between public and private, government and commercial, military and nonmilitary are blurred.” He said that it is important “that we engage in this discussion in a very direct way and include the public.”

Labels:

cybersecurity,

internet,

pc security

Wednesday, September 22, 2010

UBUNTU - New App Review Process

"The Ubuntu application review process" by Corbet, LWN.net 9/22/2010

Canonical has announced a mechanism by which applications will be reviewed for possible acceptance into the Ubuntu Software Center. "Recently we formed a community-driven Application Review Board that is committed to providing high quality reviews of applications submitted by application authors to ensure they are safe and work well. Importantly, only new applications that are not present in an existing official Ubuntu repository (such as main/universe) are eligible in this process (e.g a new version of an application in an existing official repository is not eligible). Also no other software can depend on the application being submitted (e.g. development libraries are not eligible), only executable applications (and content that is part of them) are eligible, and not stand-alone content, documentation or media, and applications must be Open Source and available under an OSI approved license."

Monday, September 20, 2010

SECURITY - The Bad Idea From Intel

"Intel's walled garden plan to put A/V vendors out of business" by Jon Stokes, Ars Technica 9/14/2010

In describing the motivation behind Intel's recent purchase of McAfee for a packed-out audience at the Intel Developer Forum, Intel's Paul Otellini framed it as an effort to move the way the company approaches security "from a known-bad model to a known-good model." Otellini went on to briefly describe the shift in a way that sounded innocuous enough--current A/V efforts focus on building up a library of known threats against which they protect a user, but Intel would love to move to a world where only code from known and trusted parties runs on x86 systems. It sounds sensible enough, so what could be objectionable about that?

Depending how enamored you are of Apple's App Store model, where only Apple-approved code gets to run on your iPhone, you may or may not be happy in Intel's planned utopia. Because, in a nutshell, the App Store model is more or less what Intel is describing. Regardless of what you think of the idea, its success would have at least two unmitigated upsides: 1) everyone will get vPro by default (i.e., it seems hard to imagine that Intel will still charge for security as an added feature), and 2) it would put every security company (except McAfee, of course), out of business. (The second one is of course a downside for security vendors, but it's an upside for users who despise intrusive A/V software.)

From a jungle to an ecosystem of walled gardens

For a company that made its fortune on the back of the x86 ISA, the shift that Intel envisions is nothing less than tectonic. x86 became the world's most popular ISA in part because anything and everything could (and eventually would) run on it. And don't forget Microsoft's role in all of this—remember the "Wintel" duopoly of years gone by? Like x86, Windows ended up being the default OS for the desktop software market, and everything else was niche. And, like x86, Windows spread because everyone who wanted it could get it and run anything they wanted on it.

The fact that x86 was so popular and open gave rise to today's A/V industry, where security companies spend 100 percent of their effort trying to identify and thwart every conceivable form of bad behavior. This approach is extremely labor-intensive and failure-prone, which the security companies love because it keeps them in business.

What Intel is proposing is that the entire x86 ecosystem move to the opposite approach, and run only the code that has been blessed as safe by some trusted authority.

Now, there are a few ways that this is likely to play out, and none of these options are mutually exclusive.

One way should be clear from Intel's purchase of McAfee: the company plans to have two roles as a security provider: a component provider role, and an end-to-end platform/software/services provider role. First, there's the company's traditional platform role, where Intel provides OEMs the basic tools for building their own walled gardens. Intel has been pushing this for some time, mainly in its ultramobile products. If anyone is using Intel's ingredients (an app store plus hardware with support for running only signed code) to build their own little version of the App Store ecosystem, it's probably one of the European or Asian carriers that sells rebadged Intel mobile internet devices (MIDs). It's clear that no one is really doing this on the desktop with vPro, though.

Then there's the McAfee purchase, which shows that Intel plans to offer end-to-end security solutions, in addition to providing the pieces out of which another vendor can build their own. So with McAfee, Intel probably plans to offer a default walled garden option, of sorts. At the very least, it's conceivable that Intel could build its own secure app store ecosystem, where developers send code to McAfee for approval and distribution. In this model, McAfee would essentially act as the "Apple" for everyone making, say, MeeGo apps.

In the world described above, the x86 ecosystem slowly transitions from being a jungle to a network of walled gardens, with Intel tending one of the largest gardens. If you're using an x86-based GoogleTV, you might participate in Google's walled garden, but not be able to run any other x86 code. Or, if you have an Intel phone from Nokia, you might be stuck in the MeeGo walled garden.

A page from the Web

None of the walled garden approaches described above sound very attractive for the desktop, and they'll probably be rejected outright by many Linux and open-source users. But there is another approach, one which Intel might decide to pursue on the desktop. The company could set up a number of trusted signing authorities for x86 code, and developers could approach any one of them to get their code signed for distribution. This is, of course, the same model used on the Web, where e-commerce sites submit an application for an https certificate.

This distributed approach seems to work well enough online, and I would personally be quite happy to use it on all my PCs. I would also love to hear from users who object to this approach—please jump into the comments below and sound off.

Pick any two

Obviously, security has always been a serious problem in the wild and woolly world of x86 and Windows. This is true mainly because Wintel is the biggest animal in the ecosystem, so bad actors get the most bang for their buck by targeting it. So why has Intel suddenly gotten so serious about it that the company is making this enormous change to the very nature of its core platform?

The answer is fairly straightforward: Intel wants to push x86 into niches that it doesn't currently occupy (phones, appliances, embedded), but it can't afford to take the bad parts along for the ride. Seriously, if you were worried about a particular phone or TV being compromised, you just wouldn't buy it. Contrast this to the Windows desktop, which many users may be forced to use for various reasons.

So Intel's dilemma looks like this: open, secure, ubiquitous—pick any two, but given the economics of the semiconductor industry, "ubiquitous" has to be one of them. Open and ubiquitous have gotten Intel where it is today, and the company is betting that secure and ubiquitous can take it the rest of the way.

Of course, my post title is my own opinion of this idea (note my bold-blue highlight above).
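To make the "known-bad" versus "known-good" distinction from the article concrete, here is a minimal Python sketch. It is only an illustration under my own assumptions: the function names are hypothetical, and plain SHA-256 hash lists stand in for both the threat libraries used by A/V products and the signed-code scheme the article describes.

```python
import hashlib
from typing import Set


def digest(code: bytes) -> str:
    """Fingerprint a program by the SHA-256 hash of its bytes."""
    return hashlib.sha256(code).hexdigest()


def allowed_known_bad(code: bytes, blocklist: Set[str]) -> bool:
    """Known-bad model: run anything that is not on the list of threats."""
    return digest(code) not in blocklist


def allowed_known_good(code: bytes, allowlist: Set[str]) -> bool:
    """Known-good model: run only what a trusted party has approved."""
    return digest(code) in allowlist


# Three hypothetical programs (the byte strings are placeholders).
trusted_app = b"approved office suite"
old_malware = b"worm already in the threat library"
new_program = b"small utility nobody has reviewed yet"

blocklist = {digest(old_malware)}
allowlist = {digest(trusted_app)}

for label, code in [("trusted", trusted_app),
                    ("malware", old_malware),
                    ("new", new_program)]:
    print(label,
          "known-bad:", allowed_known_bad(code, blocklist),
          "known-good:", allowed_known_good(code, allowlist))
```

The unreviewed program is the interesting case: the known-bad model lets it run, while the known-good model refuses it until some authority approves it. That holds whether the program is a brand-new attack or a harmless tool from an independent developer.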

Labels:

networking,

pc security,

win xp,

windows

Thursday, September 16, 2010

INTERNET - Internet Explorer 9

"Review: IE9 May Be Best Version Yet" by Jim Rapoza, InformationWeek 9/16/2010

Excerpt

That's because, while IE 9 is much improved over previous versions of IE, very few of the new features in IE 9 are new to the current browser market. In fact, most of the new features in this beta release are simply a matter of IE catching up to Chrome, Firefox, Safari and Opera.

Hmm..... Didn't Microsoft claim the same for Vista?

Let's see how many WANTED old features they made hard to enable. Not all of us want the fanciest thing on the block. AND will it work well with WinXP?

Wednesday, September 1, 2010

INTERNET - NNTP Usenet Newsgroup System

This article is about Usenet and its Newsgroups, which use NNTP.

This system is the outgrowth of the old Bulletin Board Systems (BBS) of the DOS days. Think of it as the electronic version of the old office or school cork-board bulletin boards where ANYONE can post notices and the like.

NNTP

The Network News Transfer Protocol (NNTP) is an Internet application protocol used for transporting Usenet news articles (netnews) between news servers and for reading and posting articles by end user client applications. Brian Kantor of the University of California, San Diego and Phil Lapsley of the University of California, Berkeley authored RFC 977, the specification for the Network News Transfer Protocol, in March 1986.

As you can see, this is only a specification that can be used by ANY provider via an NNTP Server.
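To give a feel for what such a specification pins down, here is a minimal Python sketch that parses the status line RFC 977 defines for a successful GROUP command (code 211, followed by the estimated article count, the first and last article numbers, and the group name). The function and class names are my own, and the sample line is made up for illustration. This is not a working NNTP client.

```python
from typing import NamedTuple


class GroupStatus(NamedTuple):
    code: int    # 211 means "group selected"
    count: int   # estimated number of articles in the group
    first: int   # first article number
    last: int    # last article number
    name: str    # newsgroup name


def parse_group_response(line: str) -> GroupStatus:
    """Parse a reply such as '211 104 10011 10125 alt.politics.usa group selected'."""
    parts = line.strip().split()
    if len(parts) < 5 or parts[0] != "211":
        raise ValueError(f"not a successful GROUP reply: {line!r}")
    code, count, first, last = (int(p) for p in parts[:4])
    return GroupStatus(code, count, first, last, parts[4])


# Hypothetical server reply, in the shape RFC 977 describes.
status = parse_group_response("211 104 10011 10125 alt.politics.usa group selected")
print(status.name, status.count, status.first, status.last)
```

Because every server answers in this same shape, any newsreader can talk to any provider's NNTP Server.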

Usenet (aka Newsnet)

Usenet is a worldwide distributed Internet discussion system. It developed from the general purpose UUCP architecture of the same name.

Duke University graduate students Tom Truscott and Jim Ellis conceived the idea in 1979 and it was established in 1980. Users read and post messages (called articles or posts, and collectively termed news) to one or more categories, known as newsgroups. Usenet resembles a bulletin board system (BBS) in many respects, and is the precursor to the various Internet forums that are widely used today; and can be superficially regarded as a hybrid between email and web forums. Discussions are threaded, with modern news reader software, as with web forums and BBSes, though posts are stored on the server sequentially.

This is the distribution system. Note that NO one entity owns this system. It's public.

ISPs usually have their own NNTP Servers and include access as part of their service. But NNTP Servers are very resource intensive, both in the storage space needed and in maintenance, including the cost involved. This is why many ISPs have dropped their NNTP service.

The industry is shifting to specialized NNTP (Usenet) Providers. They do not provide a connection to the Internet, just the Usenet service. Many have a subscription fee, which is how they make their money. For example, I use Forte's APN, which charges me a monthly fee.

Newsgroups

A Usenet newsgroup is a repository usually within the Usenet system, for messages posted from many users in different locations. The term may be confusing to some, because it is usually a discussion group. Newsgroups are technically distinct from, but functionally similar to, discussion forums on the World Wide Web. Newsreader software is used to read newsgroups.

Despite the advent of file-sharing technologies such as BitTorrent, as well as the increased use of blogs, formal discussion forums, and social networking sites, coupled with a growing number of service providers blocking access to Usenet, newsgroups continue to be widely used.

Bold emphasis mine

Examples of Newsgroups:

- microsoft.public.windows.vista.general

- alt.politics.usa

- sci.space.station

- comp.sys.ibm.pc.games.rpg

The main reason I am posting this article is there is a misleading notice being propagated in blogs and Usenet posts that the Microsoft Newsgroups are going away. NOT true.

Microsoft does not own nor control the Usenet system. So Newsgroups in the microsoft.public. series are NOT going to go away. The ONLY way this would happen is that EVERY Usenet Provider worldwide dropped these groups from their servers.

What is happening is that Microsoft is dropping the microsoft.public. series from THEIR Usenet servers. They have switched to their Live Forums.

To read Newsgroup posts, you do have to use a Usenet capable client.

Outlook Express includes this feature. There are also clients like Thunderbird (freeware, eMail + Usenet) and Agent (eMail + Usenet), which I use at home.

Note that the examples above are both eMail and Usenet clients. This is because Newsgroup posts are very close to eMails. In fact, the only difference in the format of Newsgroup posts is the "headers." Example: eMails have a To: email-address/name header, while Newsgroup posts are addressed to a group like alt.politics.usa.
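The header difference described above can be sketched with Python's standard `email` package. This is only an illustration for a simple text message: the helper names and addresses are made up, and the sketch builds the messages without sending them anywhere.

```python
from email.message import EmailMessage


def make_email(sender: str, to: str, subject: str, body: str) -> EmailMessage:
    """Build a plain eMail: it is addressed with a To: header."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = to
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def make_news_post(sender: str, newsgroups: str, subject: str, body: str) -> EmailMessage:
    """Build a Newsgroup post: same layout, but addressed with Newsgroups:."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["Newsgroups"] = newsgroups
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


# Placeholder sender, recipient, and text.
mail = make_email("me@example.com", "friend@example.com", "Hello", "Same body text.")
post = make_news_post("me@example.com", "alt.politics.usa", "Hello", "Same body text.")

print(mail.as_string())
print(post.as_string())
```

Printing the two messages side by side shows the same body and the same From: and Subject: lines. Only the addressing header changes, which is why one client can handle both.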

Labels:

email,

internet,

Newsgroups,

NNTP,

Usenet

Friday, August 27, 2010

PC SECURITY - Windows DLL Exploits

"Windows DLL exploits boom; hackers post attacks for 40-plus apps" by Gregg Keizer, ComputerWorld 8/25/2010

Excerpts

Publish exploits to subvert Firefox, Chrome, Word, Photoshop, Skype, dozens more

Some of the world's most popular Windows programs are vulnerable to attacks that exploit a major bug in the way they load critical code libraries, according to sites tracking attack code.

Among the Windows applications that are vulnerable to exploits that many have dubbed "DLL load hijacking" are the Firefox, Chrome, Safari and Opera browsers; Microsoft's Word 2007; Adobe's Photoshop; Skype; and the uTorrent BitTorrent client.

"Fast and furious, incredibly fast," said Andrew Storms, director of security operations for nCircle Security, referring to the pace of postings of exploits that target the vulnerability in Windows software. Called "DLL load hijacking" by some, the exploits are dubbed "binary planting" by others.

On Monday, Microsoft confirmed reports of unpatched vulnerabilities in a large number of Windows programs, then published a tool it said would block known attacks. The flaws stem from the way many Windows applications call code libraries -- dubbed "dynamic-link library," or "DLL" -- that give hackers wiggle room they can exploit by tricking an application into loading a malicious file with the same name as a required DLL.

If attackers can dupe users into visiting malicious Web sites or remote shares, or get them to plug in a USB drive -- and in some cases con them into opening a file -- they can hijack a PC and plant malware on it.

Even before Microsoft described the problem, published its protective tool, and said it could not address the wide-ranging issue by patching Windows without crippling countless programs, researcher HD Moore posted tools to find vulnerable applications and generate proof-of-concept code.

----

Until patches are available, Microsoft has urged users to download the free tool that blocks DLLs from loading from remote directories, USB drives, Web sites and an organization's network.

CAUTION - Make sure you understand this MS tool BEFORE installing it. If you are NOT SURE, just wait until "patches" (Microsoft Updates) come through.

Needless to say, if you are running a top-of-the-line Antivirus/Antispyware Utility, it should protect you already.
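The excerpt describes the flaw as an application being tricked into loading a malicious file with the same name as a required DLL. The minimal Python sketch below models only that idea: a lookup that takes the first matching file name from an ordered list of folders. It is a simplified model under my own assumptions, not the real Windows loader or its actual search order, and the folder and file names are invented.

```python
import tempfile
from pathlib import Path
from typing import Optional, Sequence


def resolve_library(name: str, search_dirs: Sequence[Path]) -> Optional[Path]:
    """Return the first file called `name` found in `search_dirs`, in order."""
    for directory in search_dirs:
        candidate = directory / name
        if candidate.is_file():
            return candidate
    return None


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    untrusted_dir = root / "remote_share"   # stands in for a share or USB drive
    system_dir = root / "system"            # stands in for the trusted location
    untrusted_dir.mkdir()
    system_dir.mkdir()

    # Same file name in both places; only the contents differ.
    (system_dir / "helper.dll").write_text("genuine")
    (untrusted_dir / "helper.dll").write_text("planted")

    # If the untrusted folder is searched first, the planted file wins.
    risky = resolve_library("helper.dll", [untrusted_dir, system_dir])
    # Leaving untrusted folders out of the search avoids the problem.
    safer = resolve_library("helper.dll", [system_dir])

    print("untrusted folder searched:", risky.read_text())
    print("trusted folders only:", safer.read_text())
```

In this toy model the first lookup returns the planted file and the second returns the genuine one. Dropping untrusted locations from the search is roughly the idea behind a tool that blocks DLLs from loading from remote directories and USB drives.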

Thursday, August 26, 2010

HAPPY BIRTHDAY - The PC is 29

Birth of the PC

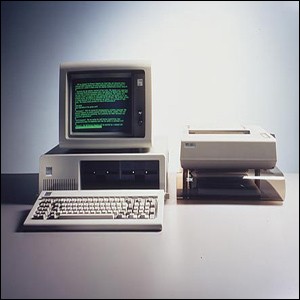

The IBM PC was announced to the world on 12 August 1981, helping drive a revolution in home and office computing.

The PC came in three versions; the cheapest of which was a $1,565 home computer.

The machine was developed by a 12-strong team headed by Don Estridge.

I owned one of these, just as you see here.

But it was a replacement for my super-duper Tandy TRS-80 (aka Radio Shack Trash-80) with max 64kb memory, two 180kb 8" external floppy drives, B&W 80x25 monitor (no graphics), and 1200 baud external modem. WOW!

Am I dating myself?

Labels:

computers,

IBM,

pc hardware,

technology

Tuesday, August 24, 2010

HARDWARE - Motherboard Info

This post is about the best way to get hardware information about your Motherboard.

My recommended utility is CPU-Z from the CPUID people.

You can read the specs from their page. Essentially, you need a newer motherboard that has the features to allow CPU-Z to access the information.

Below are screenshots from my home WinXP SP3 Pro Desktop system, so you can see what you get using this utility.

Click screenshots for better view

("HT" = Hyper-Threading = near Dual Core performance)

Labels:

cpu,

memory,

motherboard,

pc hardware,

windows

Monday, August 23, 2010

INTERNET - The Information-Age Replicator

"The Third Replicator" by SUSAN BLACKMORE, New York Times 8/22/2010

Excerpt

All around us information seems to be multiplying at an ever increasing pace. New books are published, new designs for toasters and i-gadgets appear, new music is composed or synthesized and, perhaps above all, new content is uploaded into cyberspace. This is rather strange. We know that matter and energy cannot increase but apparently information can.

It is perhaps rather obvious to attribute this to the evolutionary algorithm or Darwinian process, as I will do, but I wish to emphasize one part of this process — copying. The reason information can increase like this is that, if the necessary raw materials are available, copying creates more information. Of course it is not new information, but if the copies vary (which they will if only by virtue of copying errors), and if not all variants survive to be copied again (which is inevitable given limited resources), then we have the complete three-step process of natural selection (Dennett, 1995). From here novel designs and truly new information emerge. None of this can happen without copying.

I want to make three arguments here.

The first is that humans are unique because they are so good at imitation. When our ancestors began to imitate they let loose a new evolutionary process based not on genes but on a second replicator, memes. Genes and memes then coevolved, transforming us into better and better meme machines.

The second is that one kind of copying can piggy-back on another: that is, one replicator (the information that is copied) can build on the products (vehicles or interactors) of another. This multilayered evolution has produced the amazing complexity of design we see all around us.

The third is that now, in the early 21st century, we are seeing the emergence of a third replicator. I call these temes (short for technological memes, though I have considered other names). They are digital information stored, copied, varied and selected by machines. We humans like to think we are the designers, creators and controllers of this newly emerging world but really we are stepping stones from one replicator to the next.

Labels:

computers,

information age,

internet

Thursday, August 19, 2010

SECURITY - Big-Money Buyout

"Dealtalk: McAfee buy may trigger more tech security M&A" by Bill Rigby & Paritosh Bansal, Reuters 8/19/2010

Excerpt

Intel Corp's (INTC.O) surprise $7.7 billion bid for McAfee Inc (MFE.N) may trigger more deals as competitors scramble for a piece of the rapidly growing software security sector.

Technology giants Oracle Corp (ORCL.O), Hewlett-Packard Co (HPQ.N), IBM Corp (IBM.N) and EMC Corp (EMC.N) -- which are all looking to expand the "stack" of hardware and software they offer corporate clients -- could move to counter Intel's emergence.

That puts the spotlight on the world's biggest software security company, Symantec Corp (SYMC.O), and a number of smaller companies such as Checkpoint Systems Inc (CKP.N), Sourcefire Inc (FIRE.O), Websense Inc (WBSN.O) and SafeNet.

"We're in the early stages of a major consolidation in software, particularly in security," said FBR Capital Markets analyst Daniel Ives. "This deal speaks to the convergence of hardware and software, which is becoming increasingly more important as the industry consolidates."

Big money for corporations, bad news for users. Why? Do you really think cost and tech support will improve or get cheaper? I would guess not. After all, where do you think these companies are going to recoup the cost of acquisitions?

Labels:

pc security,

windows

Tuesday, August 17, 2010

INTERNET - Reconnecting With Life

PBS Newshour 8/16/2010

I learned something from an Executive Secretary: if you feel swamped, schedule your day. This applies to more than just internet life.

Her examples (computer):

- At work: ONLY check eMail on arrival, at 1:00pm, and just before leaving for the day

- At work: Browse the WEB during lunch (1hr for her)

- At home, workdays: Check eMail during breakfast (just in case something needs doing that day), no WEB browsing

- At home, weekends: Check eMail once a day ONLY, and limit WEB browsing to ONE day, for a few hours, both AFTER dealing with family needs

- At home: Go off-line when you do NOT need to use the internet (something I suggested)

NOTE: There is a way to go off-line WITHOUT powering-down your broadband modem/router.

- Open Network Connections

- Right-click your broadband-connection as listed and select Properties

- In the General tab, ensure BOTH checkboxes at the bottom are checked (makes the Connect Icon visible in the Taskbar Tray at ALL times)

- Exit back to Network Connections dialog and left-click + drag your broadband-connection to desktop and make a shortcut (you can move or copy this anywhere, like your Quick Launch bar)

- To go off-line: Right-click the Connect Icon and select Disable

- To go online: Use your broadband-connection shortcut, which will enable the connection
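The same off-line/online toggle can also be scripted. What follows is only a minimal sketch, not part of the steps above: it assumes a Windows version where the `netsh interface set interface` command accepts a connection name followed by `enabled` or `disabled` (Vista and later; WinXP may behave differently), and it assumes the script is run with administrator rights. The connection name "Local Area Connection" is a placeholder; use the name shown in your own Network Connections list. By default the script only prints the command so you can check it before running anything.

```python
import subprocess


def build_netsh_command(connection_name, go_online):
    """Build the netsh argument list that enables or disables a connection.

    Assumption: the positional form
    'netsh interface set interface <name> enabled|disabled' is available.
    """
    state = "enabled" if go_online else "disabled"
    return ["netsh", "interface", "set", "interface", connection_name, state]


def set_connection(connection_name, go_online, dry_run=True):
    """Print the command (dry run) or actually run it; return the command."""
    command = build_netsh_command(connection_name, go_online)
    if dry_run:
        # Show what would be run without touching the network settings.
        print(" ".join(command))
    else:
        # Needs an administrator prompt; raises CalledProcessError on failure.
        subprocess.run(command, check=True)
    return command


if __name__ == "__main__":
    # "Local Area Connection" is a hypothetical name; substitute your own.
    set_connection("Local Area Connection", go_online=False)
```

Call `set_connection(..., dry_run=False)` only once the printed command looks right for your system.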

Thursday, August 12, 2010

INTERNET - NET Neutrality

"Net Neutrality Is Critical For Innovation" by Albert Wenger, Business Insider SAI 8/12/2010

I am glad to see the net neutrality debate raging. At Union Square Ventures and on our personal blogs (AVC, Continuations) we have long been proponents of net neutrality (the USV link is from 2006!).

Our own bias here is clear: we are pro-startup and pro-innovation. Both are of course essential to the venture capital business, but we believe they are also the lifeblood of the economy. Surprisingly, a lot of people who argue against net neutrality don’t seem to make the connection to innovation and startups at all. That is even more surprising when the criticism comes from someone like Henry Blodget who is clearly the beneficiary of the level playing field provided by net neutrality.

As for the Google-Verizon proposal, my partner Brad has done a terrific job pointing out two of the key problems. I want to go a step further though. Much as I am glad that Google has been a proponent of some aspects of net neutrality, Google is no longer a startup itself! Google’s ability to cross-subsidize new markets from its amazing core business poses a potential threat to innovation that I have written about before. This access to nearly limitless funds is especially important to keep in mind when looking at net neutrality.

Because there are a lot of subtleties once one drills down, it is easy to lose sight of the most basic principle that net neutrality is trying to achieve: the ability for innovative startups to deliver their content and services on a level playing field with incumbents. It is easy to forget this because we have actually de facto had net neutrality, which is what has allowed the creation and rise of services and companies such as Google in the first place. It is hard to argue that the Internet has not unleashed a torrent of innovation that has hugely benefited endusers (at the cost of disrupting some existing businesses). Net neutrality is all about codifying this existing state of the Internet and preventing a distorted playing field that favors incumbents.

Imagine for a moment an Internet in which Google can pay Verizon and others to deliver Youtube videos faster than video content from other sites, including that of your favorite startup. Given Youtube’s existing scale and Google’s ability to cross subsidize, this would forever cement Youtube as the source of Internet video. I fail to see how this could be in anybody’s interest other than Youtube’s and Verizon’s (Business Insider included). That is exactly what the current Google-Verizon proposal would allow for the wireless Internet. Now ask yourself what will be more important in the near future — wireline or wireless delivery of the Internet?

The argument that competition among wireless carriers will take care of this seems disingenuous at best when we have all of four carriers left of which two control the bulk of the market. This is an oligopoly in which (even without any talk among carriers), the natural game theoretic equilibrium is one where none of the carriers provides net neutrality and all accept payments from incumbents.

So what could actual net neutrality look like when codified? It could be as simple as saying: carriers can charge people only for their bandwidth (up and down) and cannot accept other payments. In this scheme endusers still pay for bandwidth (as they do today) and bandwidth caps are possible. Youtube still pays for its bandwidth. But Youtube cannot pay for faster delivery, cannot pay for being excluded from consumers’ bandwidth caps etc. As it turns out — and this is again critical to emphasize — that is the status quo! A status quo that has brought us tremendous innovation and sufficient investment by carriers in bandwidth despite their griping.

Finally, to all those who would say that the government doesn’t regulate what people can pay and cannot pay for in other areas, that is of course wrong. You can pay a taxi for taking you from A to B, but paying the taxi to go above the speed limit is not legal. You can pay to get a liver transplant, but you can’t pay to get one faster than others. We full well realize that safety and ethical concerns matter. So does innovation. Net neutrality is all about protecting innovation while still providing the funds for carriers to invest in network growth.

Labels:

internet,

net neutrality,

web,

wireless

INTERNET - More on Google/Verizon Attempt to Hijack the Wireless WEB

"Web Plan Is Dividing Companies" by CLAIRE CAIN MILLER & BRIAN STELTER, New York Times 8/11/2010

At least Mr. Diller is admitting the truth about the "other" executives. It is all about GREED.

In an emerging battle over regulating Internet access, companies are taking sides.

Facebook, one of the companies that has flourished on the open Internet, indicated Wednesday that it did not support a proposal by Google and Verizon that critics say could let providers of Internet access chip away at that openness.

Meanwhile an executive of AT&T, one of the companies that stands to profit from looser regulations, called the proposal a “reasonable framework.”

Most media companies have stayed mute on the subject, but in an interview this week, the media mogul Barry Diller called the proposal a sham.

And outside of technology circles, most people have not yet figured out what is at stake.

The debate revolves around net neutrality, which in the broadest sense holds that Internet users should have equal access to all types of information online, and that companies offering Internet service should not be able to give priority to some sources or types of content.

In a policy statement on Monday, Google and Verizon proposed that regulators enforce those principles on wired connections but not on the wireless Internet. They also excluded something they called “additional, differentiated online services.”

In other words, on mobile phones or on special access lanes, carriers like Verizon and AT&T could charge content companies a toll for faster access to customers or, some analysts worry, block certain services from reaching customers altogether.

Opponents of the proposal say that the Internet, suddenly, would not be so open anymore.

“All of our life goes through this network, increasingly, and if you can’t reach your boss or get to your remotely stored work, or it’s so slow that you can’t get it done before you give up and you go to bed, that’s a problem,” said Allen S. Hammond IV, director of the Broadband Institute of California at Santa Clara University School of Law. “People need to understand that’s what we’re debating here.”

Decisions about net neutrality rest with the Federal Communications Commission and legislators, and full-throated lobbying campaigns are already under way on all sides. The Google-Verizon proposal was essentially an attempt to frame the debate.

It set off a flood of reaction, much of it negative, from Web companies and consumer advocacy groups. In the most extreme situation that opponents envision, two Internets could emerge — the public one known today, and a private one with faster lanes and expensive tolls.

Google and Verizon defended the exemptions by saying that they were giving carriers the flexibility they need to ensure that the Internet’s infrastructure remains “a platform for innovation.” Carriers say they need to be able to manage their networks as they see fit and generate revenue to expand them.

AT&T said in a statement Wednesday night that “the Verizon-Google agreement demonstrates that it is possible to bridge differences on this issue.”

Much of the debate rests on the idea of paid “fast lanes.” Content companies, the theory goes, would have to pay for favored access to a carrier’s customers, so some Web sites or video services could load faster than others.